No, “Learn to Code” Isn’t Worse Advice Than a Face Tattoo

A rebuttal to the “Face-Tattoo > Learn-to-Code” hot take

Hey Takers,

Risk analyst Ian Bremmer recently quipped on TV that telling kids to “learn to code” is now worse advice than suggesting they get a face tattoo. This provocative claim grabs attention, but does it hold water? As a technologist, I have to chuckle at the hyperbole. In reality, coding as a skill is far from obsolete. Let’s dissect why this “learn to code is dead” narrative is misleading, and how to think strategically about coding in the age of AI.

Is learning to code really the worst career move, even worse than getting a bold face tattoo? Some pundits suggest it, but the data tells a more nuanced story.

The “Learn to Code” Backlash in the AI Era

Bremmer’s dire pronouncement came during a recent exchange on Real Time with Bill Maher. He argued that artificial intelligence has essentially eviscerated the “learn to code” cottage industry, implying that coding bootcamps and programming careers are becoming a dead end. Just five years ago, “learn to code” was considered sage advice for anyone seeking a stable, high-paying career in tech. Now, according to Bremmer, “That is literally worse advice now than ‘get a face tattoo.’ You can’t do worse than learn to code.”

What’s driving this dramatic shift in perspective? A few key data points and anecdotes have fanned the flames:

Surge in AI Coding Tools: AI-powered coding assistants (like GitHub Copilot and OpenAI’s new Codex agent) can auto-generate significant amounts of code. Bremmer and others note that AI is now doing many of the routine coding tasks that entry-level programmers used to do. By some estimates, autonomous coding agents already make about 1 in 10 code contributions on GitHub, and OpenAI’s Codex agent has reportedly achieved an astonishing 85%+ code acceptance rate (higher than human coders on average). Stats like these make it seem as if AI is rapidly encroaching on programmers’ jobs.

Bleak Job Stats for New Grads: The latest labor market figures show an uptick in unemployment for recent computer science graduates. The New York Fed’s report this year found CS majors had about a 6.1% unemployment rate, and computer engineering majors about 7.5%, both higher than the ~5.8% average for all recent grads. Ironically, new CS grads are now statistically less likely to find a job than even majors like English or political science. That’s a shocking reversal for a field long thought to be a sure bet. No wonder headlines declared “Learn to Code Backfires Spectacularly as Comp-Sci Majors Suddenly Have Sky-High Unemployment.”

Anecdotes of Tech Turmoil: The tech industry has seen sweeping layoffs and hiring freezes in the past two years, leaving many programmers in the lurch. Bremmer pointed out that some formerly “cushy” software developers have resorted to selling their plasma to pay bills. (Indeed, one viral story profiled a laid-off tech worker advising others to literally sell blood or gig-drive to survive the downturn.) Meanwhile, big tech firms that once hired armies of coders are pausing junior hiring. It’s enough to make young people question whether learning to code is a viable path, or whether they’d be better off in a different field entirely.

Combine these trends and it paints a grim picture for coding careers, at least on the surface. It’s not just commentators like Bremmer; even some tech insiders have soured on the “learn to code” mantra. Tech founder Joe Procopio, for example, wrote an incensed Inc. magazine column arguing that telling everyone to “learn AI” is just “Learn to Code all over again” and that both slogans create a glut of mediocre talent that ends up displaced by automation. In his words, the coding bootcamp boom already “created a workforce of terrible coders, who are now easy targets to be replaced by AI slop coders.” Ouch.

So is it time to hang up our keyboards and toss the coding textbooks in the trash? Not so fast. The doomsayers are glossing over some crucial facts. Let’s dig deeper into why “learn to code” is not actually the worst advice in 2025 and might still be a very smart move when done right.

Why the “Coding Is Dead” Narrative Is Overblown

There’s no denying that the programming job market has gotten tougher for newcomers. But declaring coding education useless is a gross oversimplification. Here’s why the obituaries for “learn to code” are premature:

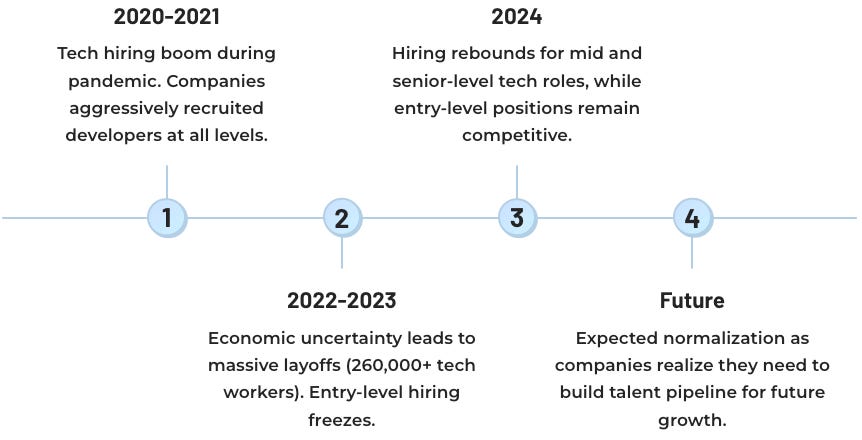

Tech Job Slump ≠ Death of Coding: The current job market weakness for programmers has more to do with the economic cycle than with the fundamental value of coding. Remember, the past couple of years saw massive tech-sector layoffs: over 260,000 tech workers were let go in 2023 alone, after many companies over-hired during the pandemic boom. Hiring of fresh grads subsequently froze. SignalFire’s 2025 talent report shows that entry-level tech hiring collapsed, with new grads now making up roughly half the share of hires they did before 2020. It’s not just coders: entry-level roles in non-technical tracks (product, marketing, etc.) also evaporated as companies tightened their belts. In other words, much of this is a post-boom correction. It’s not that coding skills suddenly became worthless; it’s that companies hit the brakes on all junior hiring, especially amid economic uncertainty and higher interest rates.

Notably, demand for experienced software engineers remains strong. While new grad opportunities fell, hiring rebounded in 2024 for mid- and senior-level tech roles. The SignalFire report notes that “even top computer science grads aren’t spared” from short-term pressures, but also that “AI hasn’t wiped out entire job categories, yet. So far, the fallout has hit new grads hardest, while demand for experienced engineers is still rising.” In plain terms, companies still need coders; they’re just being pickier and favoring those who already have proven experience. Coding as a profession isn’t vanishing; the bar to entry is just higher at the moment.

Unemployment Stats Need Context: Yes, recent CS grads have higher unemployment right now than some liberal arts majors, but percentages don’t tell the whole story. That 6 to 7% unemployment rate for CS includes a lot of people actively looking for the right job (sometimes holding out for the ideal role or higher pay). It’s also a snapshot taken during an unusual downturn. Historically, computer science majors have enjoyed low unemployment and high salaries. In fact, despite the recent uptick in joblessness, the median starting salary for new CS graduates is still around $80,000 (with mid-career medians well into six figures). No offense to our English majors, but coding still pays quite a bit more on average!

Moreover, the Fed data shows this slump came after a period of extremely low unemployment for tech grads in 2021 and 2022. As the labor market stabilizes, those with coding skills are likely to find their footing. Overall U.S. unemployment for college grads is still low by historical standards. A 6% unemployment rate isn’t a life sentence; it’s a cyclical bump. By contrast, getting a face tattoo… well, that’s a bit more permanent! (Let’s be honest: in most job interviews, having coding projects in your portfolio will impress more than having Mike Tyson-style facial ink.)

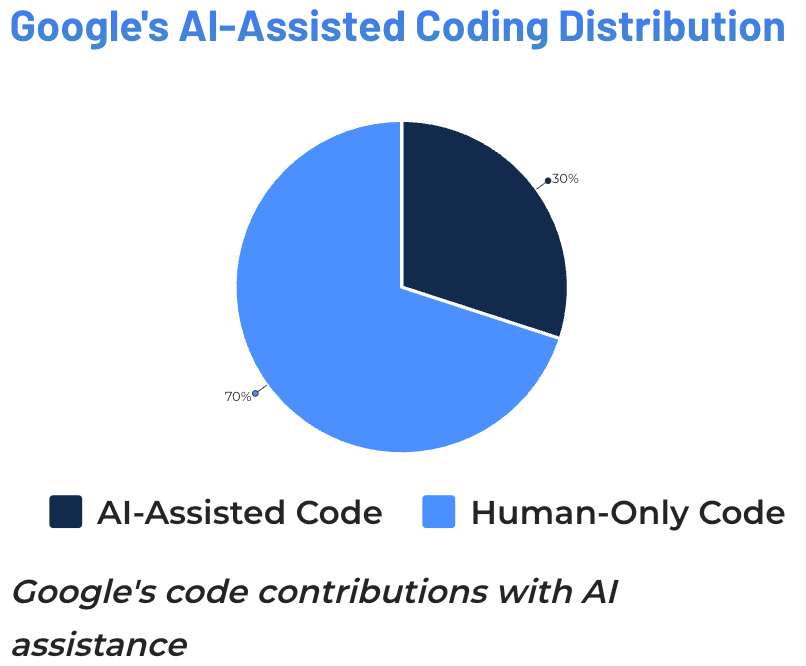

AI Helps Coders at Least as Much as It Hurts: The assumption behind “don’t learn to code, AI will do it” is that AI will replace human programmers outright. In reality, AI is turning into a power tool for programmers, not a wholesale replacement, at least for those who adapt. AI coding assistants accelerate the work of skilled developers. For instance, Google CEO Sundar Pichai recently noted that about 30% of code at Google now involves AI assistance, boosting engineering productivity by roughly 10%. He described AI as freeing programmers from tedious tasks and “making it even more fun to code,” not eliminating the need for them. Google is still hiring software engineers and Pichai remains optimistic that AI will “put creative power in more people’s hands… there’ll be more engineers doing more things.” That hardly sounds like a eulogy for coding careers. It sounds like an evolution.

It’s true that AI can churn out decent code for well-defined tasks. But coding isn’t just writing lines of syntax; it’s designing systems, solving ambiguous problems, and making judgment calls, all areas where human developers excel. Current AI models have severe limitations in those realms. They lack true understanding of a project’s goals, can’t design a complex software architecture from scratch, and notoriously produce errors when the problem deviates from their training data. Anyone who’s tried using ChatGPT to build a non-trivial app has likely encountered its spectacular failures on edge cases. As one exasperated developer remarked, “These LLMs will NEVER be able to manage a product from inception to post-mortem.” The implication: they’re statistical parrots, not visionaries. That might be an overstatement, but it highlights that human oversight remains crucial.

In practice, AI-generated code often contains bugs or security flaws that only human developers notice and fix. A recent analysis of AI in software development noted that while AI coding tools speed up mundane programming, they “cannot produce code with maintainability or scalability in mind like a human can.” AI code frequently needs refactoring and debugging by people, since the models don’t truly grasp the long-term implications of design choices. In fact, blindly accepting AI code can introduce so much technical debt that it ends up costing more time than it saves. Thus, savvy companies use AI to assist their coders, not replace them. As a DevOps report bluntly concluded: “it will never replace developers. Instead, these tools will assist developers…enhancing the team’s throughput.” “Never” is a strong word, and perhaps a long time, but at least for the foreseeable future, humans are still very much in the loop.
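To make the “security flaws” point concrete, here is a minimal, hypothetical Python sketch (not output from any particular AI tool): an assistant-style lookup function that pastes user input straight into a SQL string, next to the version a careful human reviewer would ship. The `users` table and both function names are invented for illustration; only Python’s standard `sqlite3` module is real.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Hypothetical "generated" version: user input is spliced directly into
    # the SQL text, so a crafted name can rewrite the query (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Reviewed version: the "?" placeholder makes sqlite3 bind the value,
    # so it is always treated as data, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (name,)
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    attack = "x' OR '1'='1"
    print(find_user_unsafe(conn, attack))  # the injected OR clause matches every row
    print(find_user_safe(conn, attack))    # no user is literally named that
```

The fix is a one-line change, but noticing that it is needed requires understanding how SQL injection works, which is exactly the kind of knowledge “learn to code” is supposed to build.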

Coding Isn’t Just a Job, It’s a Literacy: Even if AI automates parts of programming, knowing how to code is akin to knowing how to do math. It’s a foundational skill in a digital world. Learning to code teaches computational thinking: a way of breaking down problems into logical steps. This mindset is incredibly valuable beyond writing code itself. It enables you to understand what software can and can’t do, to communicate with developers and AI systems, and to creatively leverage technology in any field. In an era when every industry is being transformed by software and AI, understanding the basics of coding is empowering.

For example, the new mantra “learn AI” often really means learn how to use AI tools effectively. But guess what? To automate workflows or build AI-driven solutions, it helps immensely to have some coding ability. If you can script a quick Python data pipeline or tweak a piece of code an AI wrote for you, you’re ahead of the pack. Those who know how to code will boss the AIs around, not vice versa. On the flip side, those who lack any coding/technical background might be at the mercy of whatever the tools give them, without the skills to verify or customize the output.
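As an illustration of the kind of “quick Python data pipeline” meant here, the sketch below reads a small CSV, skips incomplete rows, and totals an amount per region. The column names and data are hypothetical, and only the standard library is used. The blank-amount check is the sort of small tweak a person often has to add when generated code assumes perfectly clean input.

```python
import csv
import io
from collections import defaultdict

# Hypothetical input; in practice this might be a CSV export from a spreadsheet.
RAW_CSV = """region,product,amount
north,widget,120.50
south,widget,80.00
north,gadget,42.25
south,gadget,
east,widget,99.99
"""


def total_by_region(text: str) -> dict[str, float]:
    """Sum the 'amount' column per region, skipping rows with a blank amount."""
    totals: dict[str, float] = defaultdict(float)
    for row in csv.DictReader(io.StringIO(text)):
        amount = row["amount"].strip()
        if not amount:  # skip incomplete rows instead of crashing on float("")
            continue
        totals[row["region"]] += float(amount)
    return dict(totals)


if __name__ == "__main__":
    for region, total in sorted(total_by_region(RAW_CSV).items()):
        print(f"{region}: {total:.2f}")
```

Swapping the string for `open("your_file.csv")` (a hypothetical file name) turns this into a reusable script; the point is that a few lines of code you understand can replace a lot of manual spreadsheet work.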

The Market Adapts (and So Can People): The tech industry is famously dynamic. Yes, companies are currently favoring experienced developers and squeezing out entry-level roles. But this trend has a downside: if no one hires junior devs, who will become the senior devs of tomorrow? Forward-thinking firms recognize they must keep bringing in new talent to stay competitive long-term. We’re already seeing some mid-size companies snap up unemployed CS grads to fill the gap left by big tech’s pause. Startups, too, often need scrappy coders to build MVPs cheaply; they can’t afford a team of highly paid veterans. In short, opportunities still exist; they’ve just shifted. Many coders are finding jobs in non-tech industries (finance, manufacturing, healthcare), which are all hungry for software skills as they undergo digital transformations. Coding isn’t confined to Google or Facebook.

There’s also a qualitative mismatch in the narrative. The claim that learning to code creates hordes of terrible coders misses an obvious point: not everyone who learns to code aims to become a professional software engineer at a FAANG company. Some use coding as a tool in another career (automation in marketing, data analysis in biology, etc.). Others tinker with code to start their own indie projects or businesses. From robotics to web design to scientific research, coding knowledge opens doors. Dismissing “learn to code” wholesale ignores these diverse uses. We don’t discourage people from learning a musical instrument just because not everyone will play at Carnegie Hall; similarly, learning programming has intrinsic and practical value even if you don’t end up a Google engineer.

In sum, reports of coding’s demise are greatly exaggerated. However, the landscape is changing. The real takeaway is not “don’t learn to code” but rather “learn to code, and…”, meaning learn to code smartly and in conjunction with other skills. Simply put, the advice needs an update for the AI era.

How to Future-Proof Your Coding Journey

If “just learn to code” was the simplistic advice of the 2010s, the 2020s demand a more strategic approach. Here’s a constructive take for aspiring coders and those navigating the shifting tech career winds:

Blend Coding with Problem-Solving Skills: Don’t just memorize syntax or churn out tutorial projects. Focus on the deeper concepts and problem-solving techniques behind coding. AI can generate boilerplate code, but humans excel at understanding real-world needs and devising creative solutions. Develop your analytical thinking, algorithmic reasoning, and ability to architect a program. These skills will remain in demand even as AI automates grunt work.

Master the “Why” and “What”, Not Just the “How”: Good programmers don’t only write code; they figure out what to build and why it matters for the end user. Domain knowledge is key. If you pair coding expertise with an understanding of a particular field (say finance, biology, education, art), you become far more valuable. For example, an engineer who knows healthcare can build better medical software. So, learn to code and learn your domain. That’s something no AI can compete with, because context is king.

Embrace AI as Your Coding Sidekick: Rather than seeing AI as the enemy, make it your ally. Tools like Copilot can handle the boilerplate and suggest solutions, effectively increasing your productivity. The developers who will thrive are those who act as the pilot for their AI copilot: guiding the tools, double-checking outputs, and integrating AI-generated code intelligently. This means you should learn how to prompt these tools, how to review AI code for errors, and how to integrate it into larger projects. In short, learn to code with AI. It’s a skill set in its own right.

Build a Portfolio of Projects (that AI can’t easily clone): One way to stand out in a crowded field is to have done projects that show initiative and creativity. It’s one thing to solve cookie-cutter coding challenges; it’s another to build a small app or contribute to an open-source project. When you work on open-ended projects, you encounter all the messy, human-centric problems (design decisions, user feedback, unpredictable requirements) that AI isn’t great at handling. Your unique projects are proof of skills that go beyond rote coding. They demonstrate that you can see a project through, something a pure code generator cannot claim.

Continuously Learn (It’s Not Just Coding Anymore): The “learn to code” slogan might be passé, but the real underlying advice, learn new skills continuously, is more relevant than ever. Today it might be Python and prompt engineering, tomorrow it could be something like human-AI interaction design or quantum computing. Be adaptable and keep an eye on emerging trends. The people who thought getting a CS degree 10 years ago was a ticket to 40 years of easy employment are now caught off guard. Don’t be that person. Commit to lifelong learning in tech, whether it’s coding, AI, data science, or whatever comes next. The ability to pick up new tools and knowledge quickly is itself one of the best skills you can have.

Finally, let’s address the elephant (or tattooed face) in the room: passion. If you enjoy coding, if you find it fulfilling to solve problems and create things in code, then don’t let doomsayers dissuade you. Motivation and genuine interest often lead to excellence. The world will always need excellent technologists. Yes, the tools will change, but passionate learners adapt to those changes. In contrast, chasing a career just because someone told you “this is where the money is” (or isn’t) is risky. Had you pivoted from coding to, say, learning to be a blockchain developer because it was the hot thing, you might have found that fad cooling too. So take all career advice (including this one!) with a grain of salt and consider your own strengths and interests.

Conclusion: Coding Isn’t “Over”, It’s Evolving

The sensational claim that “learn to code” is now the worst advice, worse than getting a face tattoo, makes for a great headline and a funny punchline. It’s a reaction to real shifts (the rise of AI and a turbulent job market), but it’s ultimately an exaggeration. Learning to code is still extremely useful, but the context around it has changed. We shouldn’t abandon teaching coding; we should update how we teach it and what additional skills we pair with it.

Rather than telling the next generation “Don’t learn to code,” we should be telling them: “Learn to code, but also learn to be adaptable, learn the fundamentals, learn the domain where you’ll apply it, and learn how to work alongside intelligent machines.” That may not fit in a snappy tweet, but it’s a lot more constructive (and accurate) advice.

In the end, I’d wager that coding skills will age far better than a face tattoo, as life decisions go. One is a gateway to a wide range of opportunities in our tech-driven world; the other is… well, a questionable fashion statement. So if you’re considering your future in the era of AI, here’s my take: by all means, learn to code, just don’t do it blindly. Stay curious, keep an eye on where technology is headed, and be ready to augment your coding with higher-level skills. The code may practically write itself in 2030, but the world will still need people who know what to build, how to integrate systems, and why it all matters. Face tattoos, optional. 😉